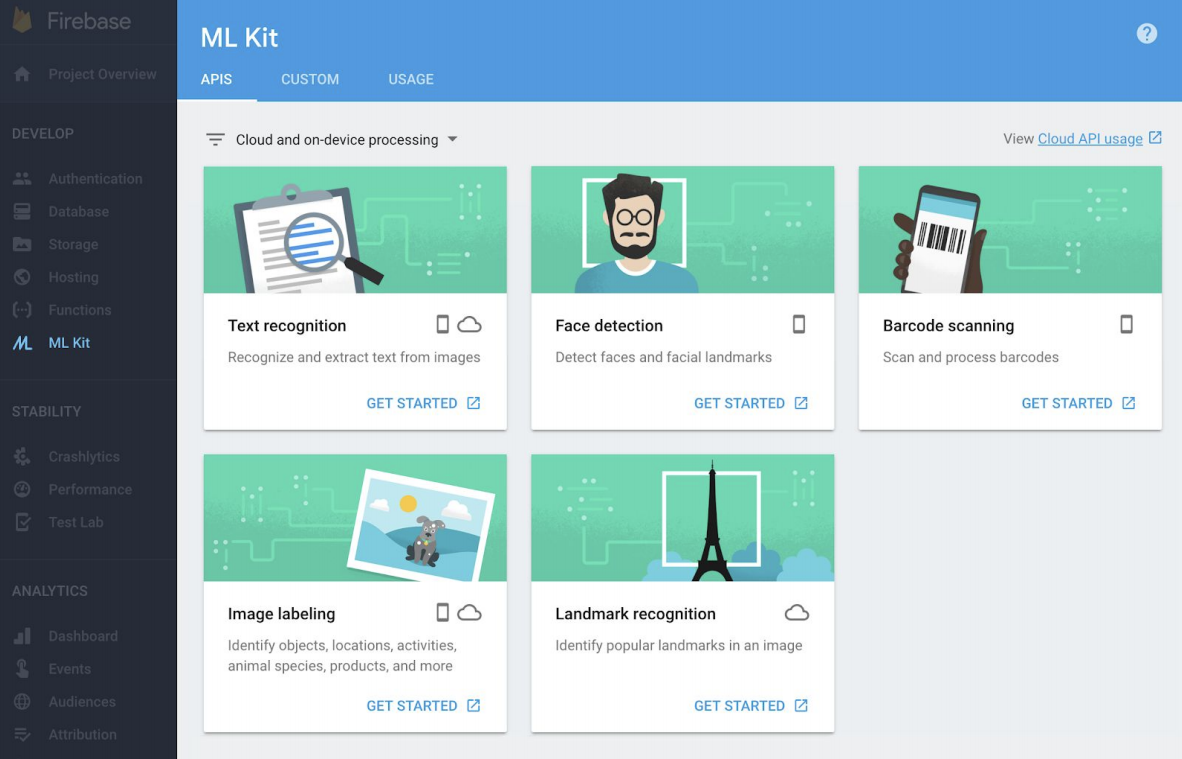

At its I/O developer conference, Google today introduced ML Kit, a new software development kit (SDK) for app developers on iOS and Android that allows them to integrate a number of pre-built Google-provided machine learning models into their apps. One nifty twist here is that these models, which support text recognition, face detection, barcode scanning, image labeling and landmark recognition, are available both on- and offline, depending on network availability and the developer’s preference.

In the coming months, Google plans to extend the current base set of available APIs with two more: one for integrating the same kind of smart replies that you’re probably familiar with from apps like Inbox and Gmail, and a high-density face contour feature for the face detection API.

The real game-changer here is the set of offline models that developers can integrate into their apps and use for free. Unsurprisingly, there is a tradeoff. The models that run on the device are smaller and hence less accurate. In the cloud, neither model size nor available compute power is an issue, so those models are larger and hence more accurate.

That’s pretty much standard for the industry. Earlier this year, for example, Microsoft launched its offline neural translations, which can also run either online or on the device. The tradeoff there is the same.

Brahim Elbouchikhi, Google’s group product manager for machine intelligence and the camera lead for Android, told me that a lot of developers will likely do some of the preliminary machine learning inference on the device, maybe to see if there is actually an animal in a picture, and then move to the cloud to detect what breed of dog it is. That makes sense because the on-device image labeling service features about 400 labels, while the cloud-based one features more than 10,000. To power the on-device models, ML Kit uses the standard Neural Networks API on Android and its equivalent on Apple’s iOS.
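To make that two-stage pattern concrete, here is a minimal sketch in Python. It is not ML Kit code: `Label`, `label_two_stage`, the gate labels, the 0.7 threshold and both stand-in labelers are hypothetical names invented for illustration, and a real app would plug in its own on-device and cloud calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass
class Label:
    name: str
    confidence: float


# Hypothetical type: any function that maps image bytes to a list of labels.
Labeler = Callable[[bytes], List[Label]]


def label_two_stage(
    image: bytes,
    on_device: Labeler,
    cloud: Labeler,
    gate_labels: Set[str],
    threshold: float = 0.7,  # assumed cutoff, not a value from the article
) -> List[Label]:
    """Run the small local model first; call the cloud only if it is worth it."""
    coarse = on_device(image)
    worth_refining = any(
        label.name in gate_labels and label.confidence >= threshold
        for label in coarse
    )
    if not worth_refining:
        # No network round trip: the coarse answer is the final answer.
        return coarse
    # The larger cloud model supplies the fine-grained labels.
    return cloud(image)


def fake_on_device(image: bytes) -> List[Label]:
    # Stand-in for a small local model with a coarse label set.
    if image.startswith(b"dog"):
        return [Label("Animal", 0.92)]
    return [Label("Building", 0.88)]


def fake_cloud(image: bytes) -> List[Label]:
    # Stand-in for a larger cloud model with a much bigger label set.
    return [Label("Golden Retriever", 0.97)]
```

With these stand-ins, an image the local model tags as an animal is passed on to the cloud labeler, while anything else never leaves the device.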

He also stressed that this is very much a cross-platform product. Developers don’t think of machine learning models as Android- or iOS-specific, after all.

For developers who want to go beyond the pre-trained models, ML Kit also supports TensorFlow Lite models.

As Google rightly notes, getting up to speed with using machine learning isn’t for the faint of heart. This new SDK, which falls under Google’s Firebase brand, is clearly meant to make using machine learning easier for mobile developers. While Google Cloud already offers a number of similar pre-trained and customizable machine learning APIs, those don’t work offline, and the experience isn’t tightly integrated with Firebase or with the Firebase Console, which is quickly becoming Google’s go-to hub for all things mobile development.

Even for custom TensorFlow Lite models, Google is working on compressing the models to a more workable size. For now, this is only an experiment, but developers who want to give it a try can sign up here.

Overall, Elbouchikhi argues, the work here is about democratizing machine learning. “Our goal is to make machine learning just another tool,” he said.