Google researchers know how much people like to trick others into thinking they’re on the moon, or that it’s night instead of day, and other fun shenanigans only possible if you happen to be in a movie studio in front of a green screen. So they did what any good 2018 coder would do: build a neural network that lets you do it.

This “video segmentation” tool, as they call it (well, everyone does) is rolling out to YouTube Stories on mobile in a limited fashion starting now — if you see the option, congratulations, you’re a beta tester.

A lot of ingenuity seems to have gone into this feature. It’s a piece of cake to figure out where the foreground ends and the background begins if you have a depth-sensing camera (like the iPhone X’s front-facing array) or plenty of processing time and no battery to think about (like a desktop computer).

On mobile, though, and with an ordinary RGB image, it’s not so easy to do. And if doing a still image is hard, video is even more so, since the computer has to do the calculation 30 times a second at a minimum.

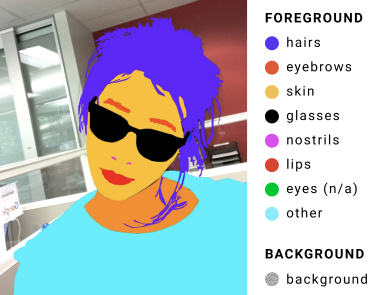

Well, Google’s engineers took that as a challenge and set up a convolutional neural network architecture, training it on thousands of labelled images like the one above.

The network learned to pick out the common features of a head and shoulders, and a series of optimizations lowered the amount of data it needed to crunch in order to do so. And — although it’s cheating a bit — the result of the previous calculation (so, a sort of cutout of your head) gets used as raw material for the next one, further reducing load.
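
To make that feedback trick concrete, here is a minimal Python sketch of the idea, not Google's actual implementation. It assumes the previous mask is simply stacked onto the RGB frame as a fourth channel, and `toy_segment` is a hypothetical stand-in for the trained network (it just blends a brightness cue with the previous mask). The function names and the blending weights are all illustrative assumptions.

```python
import numpy as np

def toy_segment(rgbm):
    """Hypothetical stand-in for the trained network.

    Takes an (H, W, 4) array (RGB plus the previous mask) and returns
    an (H, W) foreground mask with values in [0, 1].
    """
    rgb, prev_mask = rgbm[..., :3], rgbm[..., 3]
    brightness = rgb.mean(axis=-1)
    # Crude cue: treat bright pixels as foreground, smoothed by the old mask.
    return np.clip(0.5 * (brightness > 0.5) + 0.5 * prev_mask, 0.0, 1.0)

def segment_video(frames, segment=toy_segment):
    """Segment frames one by one, feeding each mask into the next call."""
    # The first frame has no history, so it starts from an empty mask.
    prev_mask = np.zeros(frames[0].shape[:2], dtype=np.float32)
    masks = []
    for frame in frames:
        # Stack the previous mask onto the frame as a fourth channel.
        rgbm = np.concatenate([frame, prev_mask[..., None]], axis=-1)
        prev_mask = segment(rgbm).astype(np.float32)
        masks.append(prev_mask)
    return masks

def replace_background(frame, mask, background):
    """Composite the masked foreground over a new background."""
    alpha = mask[..., None]  # broadcast the mask across the RGB channels
    return alpha * frame + (1.0 - alpha) * background
```

Frames here are assumed to be float arrays of shape `(H, W, 3)` with values in `[0, 1]`. Swapping `toy_segment` for a real model would leave the loop unchanged, which is the point: each frame's result becomes part of the next frame's input.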

The result is a relatively accurate segmentation engine that runs more than fast enough to be used in video: 40 frames per second on the Pixel 2 and over 100 on the iPhone 7 (!).
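
For a sense of what those numbers mean, the short Python sketch below converts frame rates into per-frame time and compares them with the 30-frames-per-second minimum mentioned earlier. It assumes the quoted figures describe segmentation alone, which the numbers above don't spell out, and it treats the iPhone 7's "over 100" as exactly 100, so its real per-frame time would be somewhat lower.

```python
def frame_time_ms(fps):
    """Milliseconds available (or spent) per frame at a given frame rate."""
    return 1000.0 / fps

budget = frame_time_ms(30)  # about 33.3 ms per frame at 30 fps

for device, fps in {"Pixel 2": 40, "iPhone 7": 100}.items():
    t = frame_time_ms(fps)
    print(f"{device}: {t:.1f} ms per frame, {budget - t:.1f} ms of headroom at 30 fps")
```

Under those assumptions the Pixel 2 spends 25 ms per frame and the iPhone 7 at most 10 ms, leaving room in each 33.3 ms slot for everything else the app has to do.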

This is great news for a lot of folks: removing or replacing a background is a handy tool to have in your toolbox, and this makes it quite easy. And hopefully it won’t kill your battery.
