Machine learning may be the tool du jour for everything from particle physics to recreating the human voice, but it’s not exactly the easiest field to get into. Despite the complexities of video editing and sound design, we have UIs that let even a curious kid dabble in them. Why not with machine learning? That’s the goal of Lobe, a startup and platform that genuinely seems to have made AI models as simple to put together as LEGO bricks.

I talked with Mike Matas, one of Lobe’s co-founders and the designer behind many a popular digital interface, about the platform and his motivations for creating it.

“There’s been a lot of situations where people have kind of thought about AI and have these cool ideas, but they can’t execute them,” he said. “So those ideas just like shed, unless you have access to an AI team.”

This happened to him, too, he explained.

“I started researching because I wanted to see if I could use it myself. And there’s this hard to break through veneer of words and frameworks and mathematics — but once you get through that the concepts are actually really intuitive. In fact even more intuitive than regular programming, because you’re teaching the machine like you teach a person.”

But like the hard shell of jargon, existing tools were also rough around the edges: powerful and functional, but much more like learning a development environment than playing around in Photoshop or Logic.

“You need to know how to piece these things together, there are lots of things you need to download. I’m one of those people who if I have to do a lot of work, download a bunch of frameworks, I just give up,” he said. “So as a UI designer I saw the opportunity to take something that’s really complicated and reframe it in a way that’s understandable.”

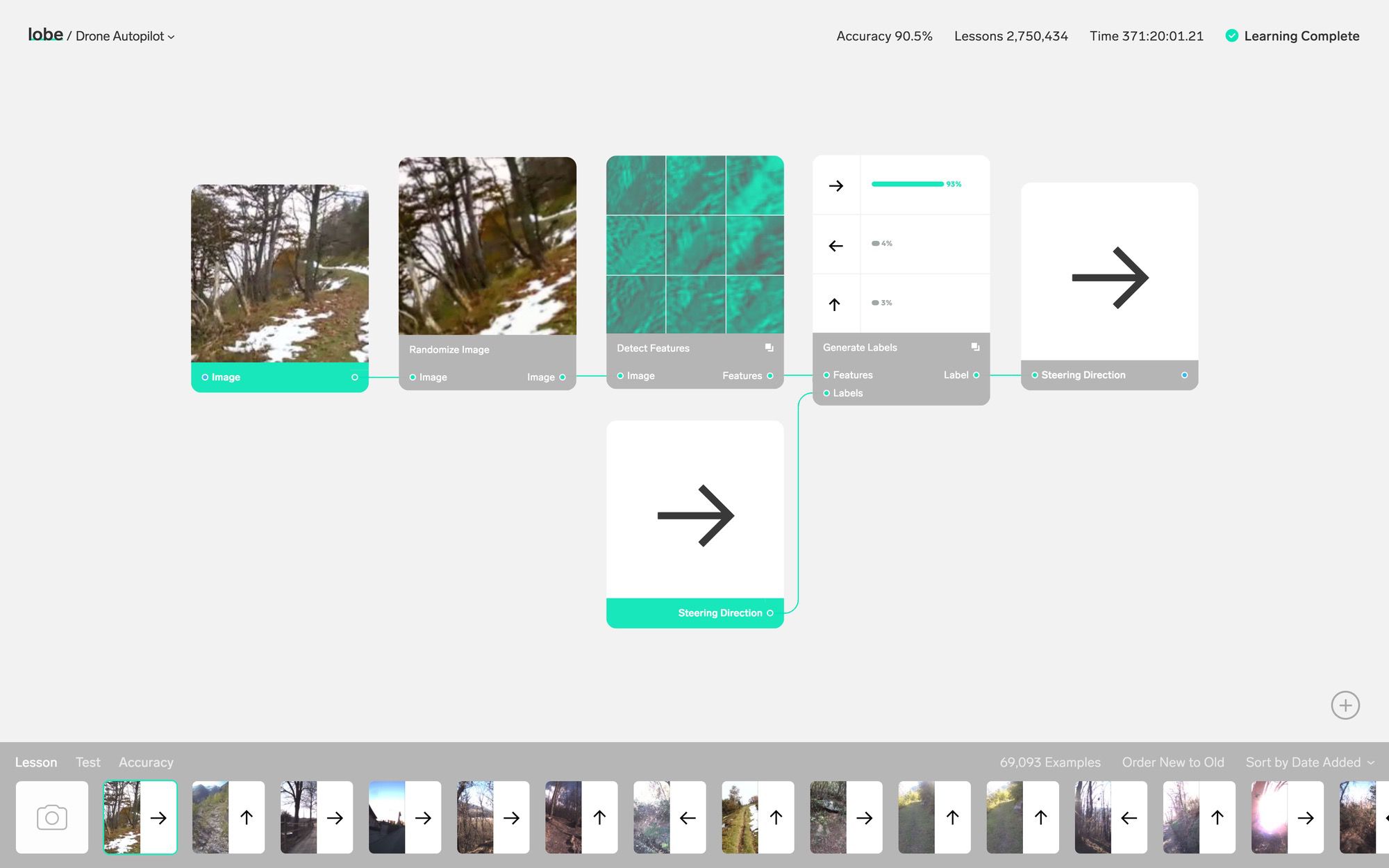

Lobe, which Matas created with his co-founders Markus Beissinger and Adam Menges, takes the concepts of machine learning, things like feature extraction and labeling, and puts them in a simple, intuitive visual interface. As demonstrated in a video tour of the platform, you can make an app that recognizes hand gestures and matches them to emoji without ever seeing a line of code, let alone writing one. All the relevant information is there, and you can drill down to the nitty-gritty if you want, but you don’t have to. The ease and speed with which new applications can be designed and experimented with could open up the field to people who see the potential of the tools but lack the technical know-how.
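For readers curious what "feature extraction and labeling" look like once the visual interface is peeled away, here is a minimal sketch in plain Python. It is not Lobe's code or API. The toy finger readings, the `extract_features` helper and the nearest-centroid classifier are all assumptions made for illustration, and a real gesture recognizer would start from camera images and use a neural network.

```python
from collections import defaultdict
from math import dist

# Emoji labels written as escapes so the file is encoding-safe.
THUMBS_UP = "\U0001F44D"
VICTORY = "\u270C"
OPEN_HAND = "\U0001F590"

# Hypothetical labeled data: one number per finger (thumb first),
# where 1.0 means fully extended and 0.0 means curled.
LABELED_EXAMPLES = [
    ([1.0, 0.0, 0.0, 0.0, 0.0], THUMBS_UP),
    ([0.9, 0.1, 0.0, 0.0, 0.1], THUMBS_UP),
    ([0.0, 1.0, 1.0, 0.0, 0.0], VICTORY),
    ([0.1, 0.9, 1.0, 0.1, 0.0], VICTORY),
    ([1.0, 1.0, 1.0, 1.0, 1.0], OPEN_HAND),
    ([0.9, 1.0, 0.9, 1.0, 0.9], OPEN_HAND),
]


def extract_features(fingers):
    """Feature extraction: reduce five readings to two numbers,
    the thumb extension and the average of the other four fingers."""
    thumb, others = fingers[0], fingers[1:]
    return (thumb, sum(others) / len(others))


def train(examples):
    """Average the feature vectors of each label into one centroid."""
    grouped = defaultdict(list)
    for fingers, label in examples:
        grouped[label].append(extract_features(fingers))
    return {
        label: tuple(sum(axis) / len(axis) for axis in zip(*points))
        for label, points in grouped.items()
    }


def predict(centroids, fingers):
    """Return the label whose centroid is closest to the new reading."""
    features = extract_features(fingers)
    return min(centroids, key=lambda label: dist(centroids[label], features))


if __name__ == "__main__":
    model = train(LABELED_EXAMPLES)
    print(predict(model, [0.95, 0.0, 0.1, 0.0, 0.0]))
```

The shape is the same one the article describes: labeled examples go in, features are pulled out of them, and the trained model maps a new input to the closest label. A tool like Lobe hides those steps behind a visual interface.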

He compared the situation to the early days of PCs, when computer scientists and engineers were the only ones who knew how to operate them. “They were the only people able to use them, so they were the only people able to come up with ideas about how to use them,” he said. But by the late ’80s, computers had been transformed into creative tools, largely because of improvements to the UI.

Matas expects a similar flood of applications, even beyond the many we’ve already seen, as the barrier to entry drops.

“People outside the data science community are going to think about how to apply this to their field,” he said, and unlike before, they’ll be able to create a working model themselves.

A raft of examples on the site show how a few simple modules can give rise to all kinds of interesting applications: reading lips, tracking positions, understanding gestures, generating realistic flower petals. Why not? You need data to feed the system, of course, but doing something novel with it is no longer the hard part.

And in keeping with the machine learning community’s commitment to openness and sharing, Lobe models aren’t some proprietary thing you can only operate on the site or via the API. “Architecturally we’re built on top of open standards like TensorFlow,” Matas said. Do the training on Lobe, test it and tweak it on Lobe, then compile it down to whatever platform you want and take it to go.

Right now the site is in closed beta. “We’ve been overwhelmed with responses, so clearly it’s resonating with people,” Matas said. “We’re going to slowly let people in, it’s going to start pretty small. I hope we’re not getting ahead of ourselves.”