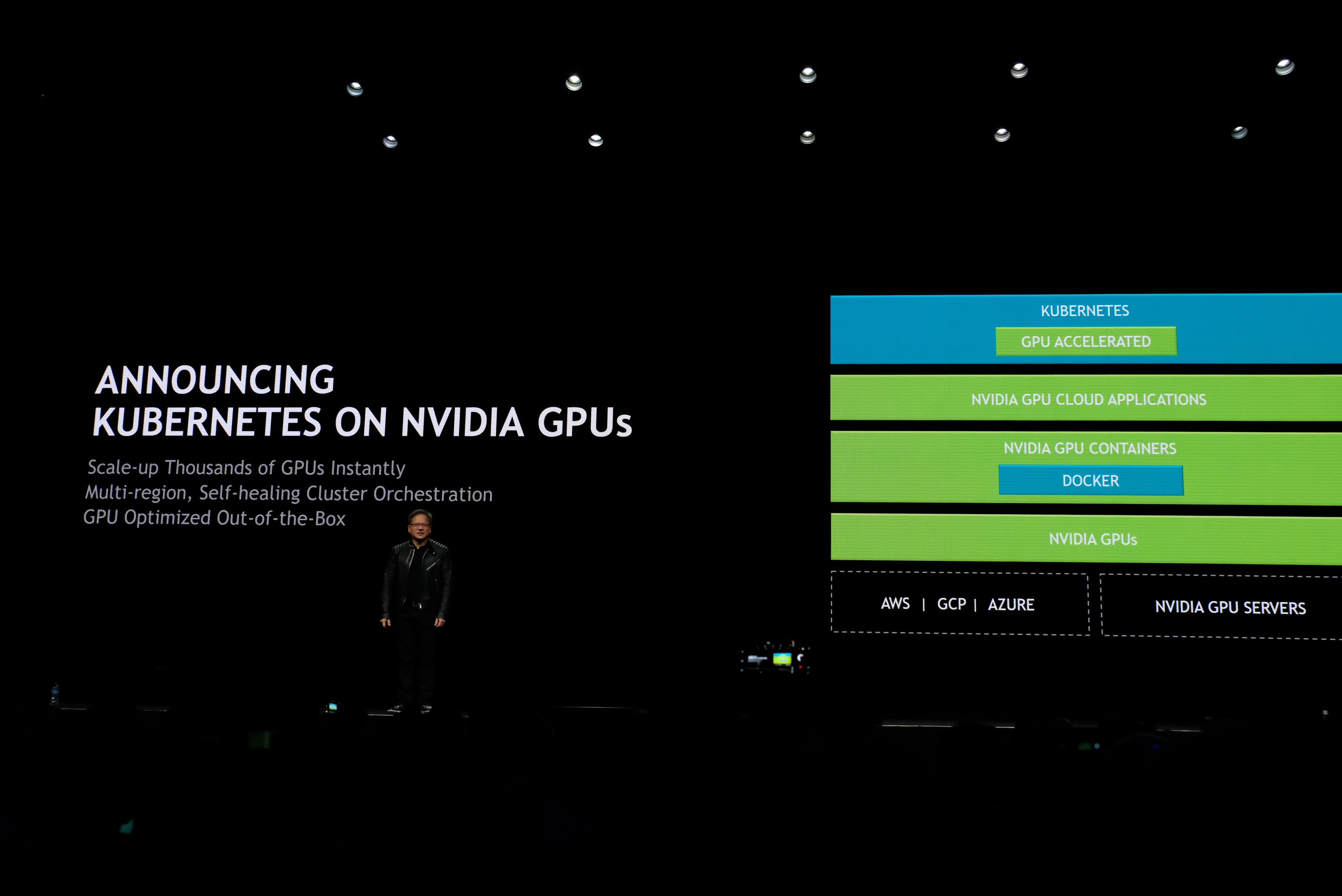

This has been a long time coming, but during his GTC keynote, Nvidia CEO Jensen Huang today announced support for the Google-incubated Kubernetes container orchestration system on Nvidia GPUs.

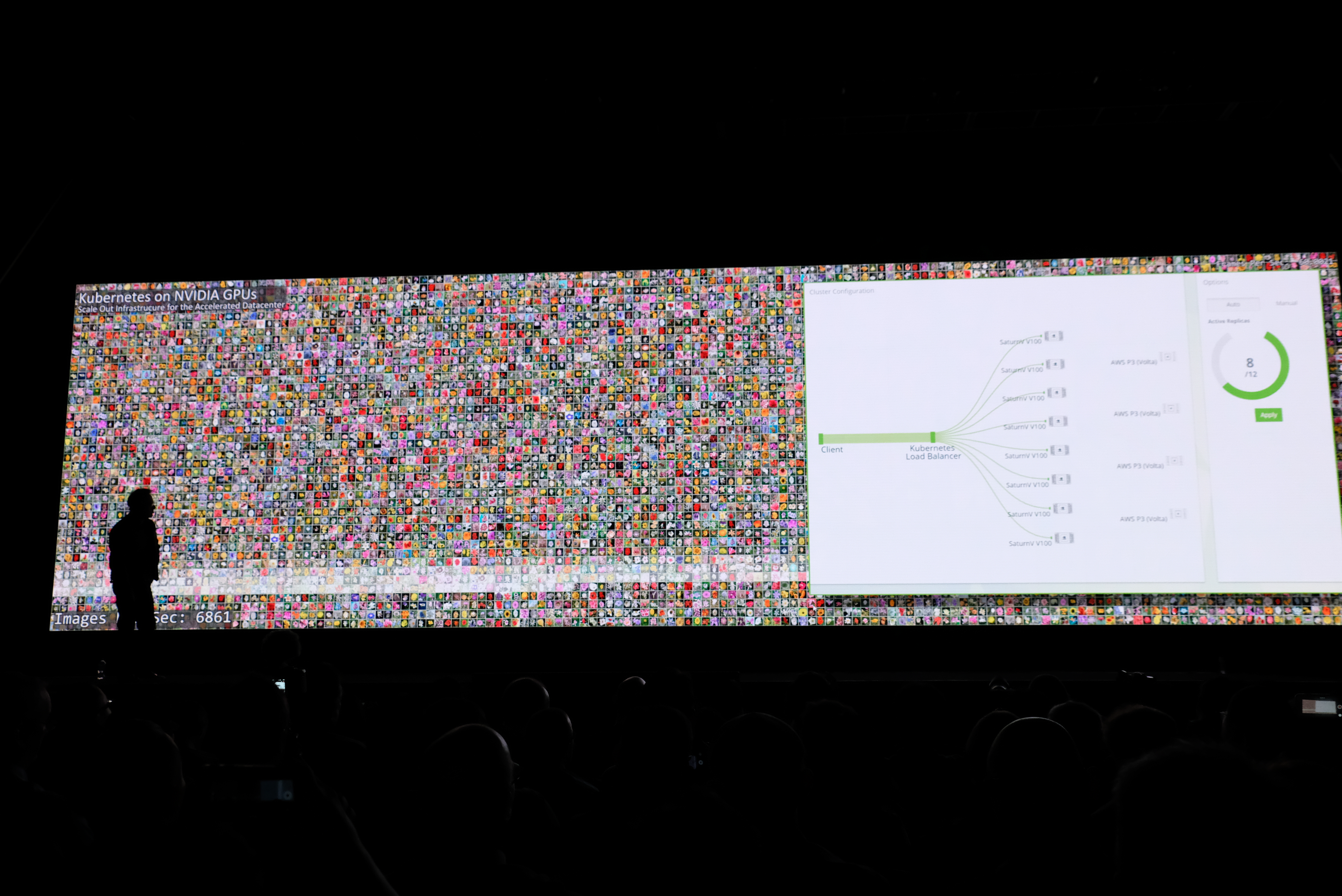

The idea here is to optimize the use of GPUs in hyperscale data centers, the kind of environments where you may use hundreds or thousands of GPUs to speed up machine learning processes, and to allow developers to take their GPU-accelerated containers to multiple clouds without having to make any changes.

“Now that we have all these accelerated frameworks and all this accelerated code, how do we deploy it into the world of data centers?” Huang asked. “Well, it turns out there is this thing called Kubernetes. […] This is going to bring so much joy. So much joy.”

Nvidia is contributing its GPU enhancements to the Kubernetes open source community. Machine learning workloads tend to be massive, both in terms of the computation they need and the data that drives them. Kubernetes helps orchestrate these workloads, and with this update, the orchestrator is now GPU-aware.

“Kubernetes is now GPU aware. The Docker container is now GPU accelerated. You’ve got all of the frameworks that I talked about which are GPU accelerated. And now you’ve got all these inference workloads which are GPU accelerated. And you’ve got Nvidia GPUs in all these clouds. And you’ve got this wonderful system orchestration layer called Kubernetes. Life is complete,” said Huang (to laughter from the audience).

It’s worth noting that Kubernetes already had some support for GPU acceleration before today’s announcement. Google, for example, already supports GPUs in its Kubernetes Engine.