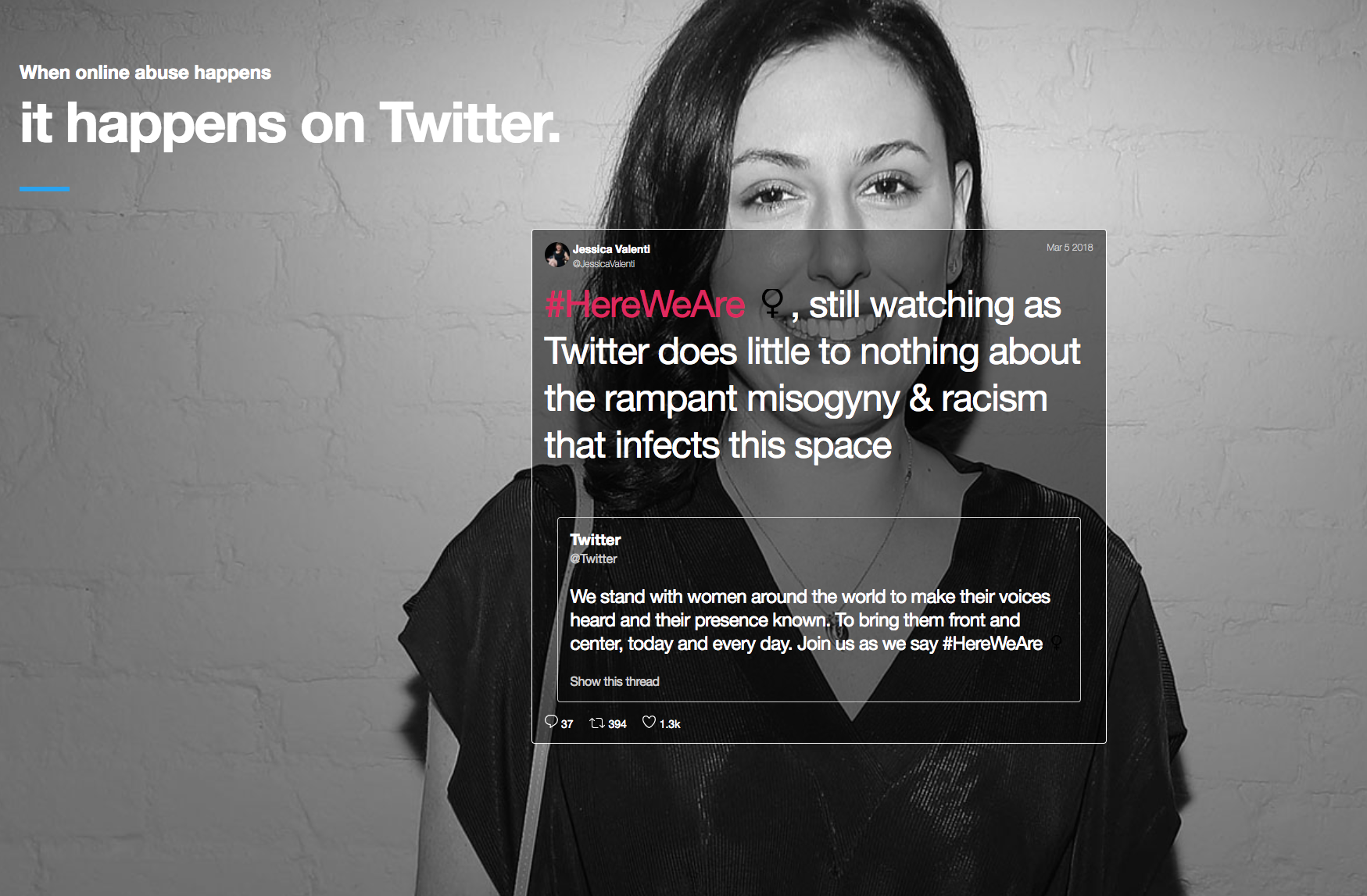

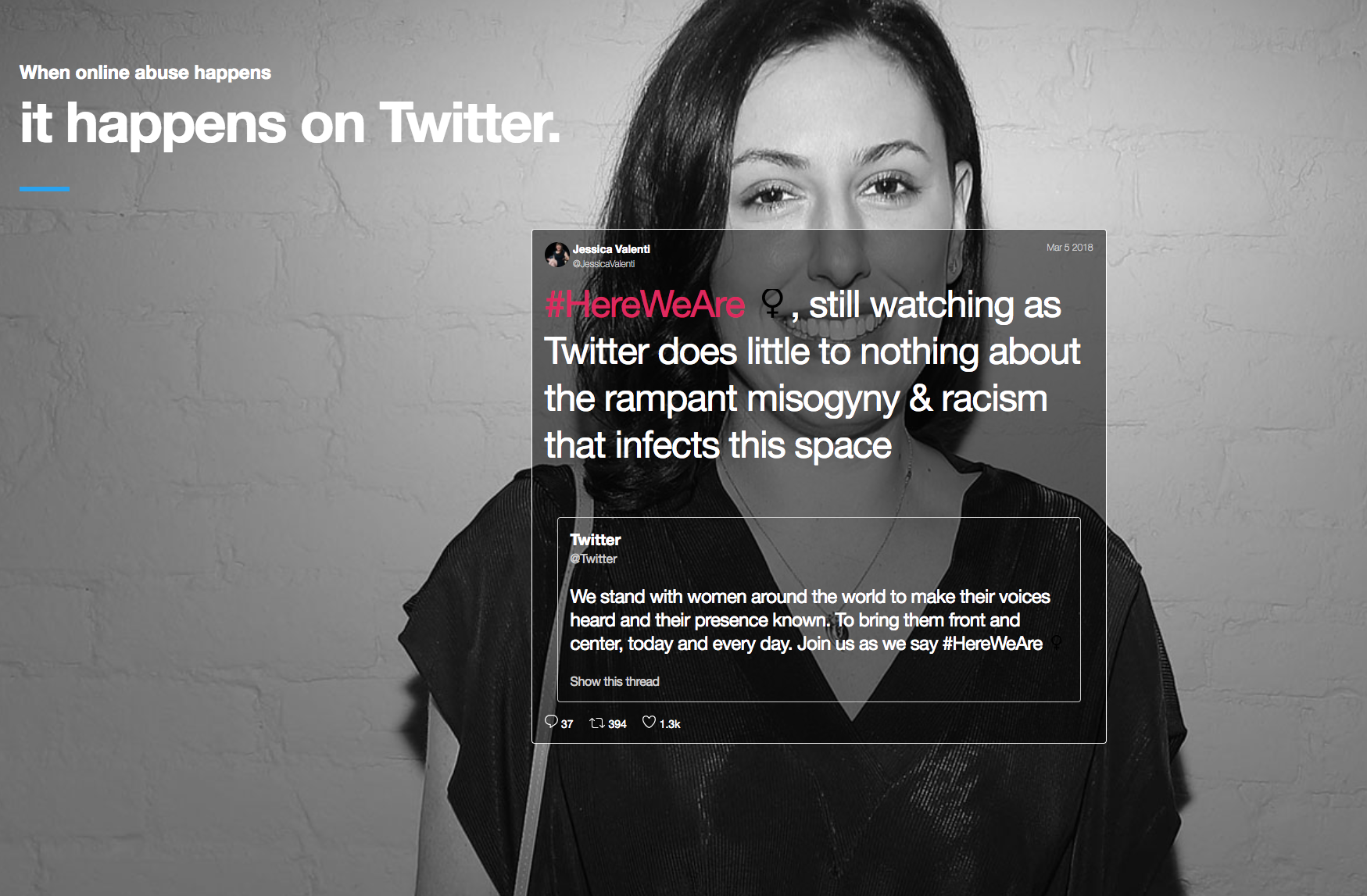

Twitter has found itself under fire again. This time, the criticism comes from Amnesty International, a non-governmental organization that focuses on human rights. Amnesty International’s new report, “#ToxicTwitter: Violence and abuse against women online,” details Twitter’s failures to ensure safety online and to prevent violence and abuse toward women. With this report, Amnesty International aims to examine why and how this is a human rights issue, Azmina Dhrodia, the organization’s technology and human rights researcher, told TechCrunch.

By framing it as a human rights issue, Amnesty International says it hopes to be able to push Twitter to enforce its own policies consistently and be transparent about how it’s doing so.

“Twitter’s failure to adequately and consistently enforce their own policies is leading women to either silence or censor themselves online,” Dhrodia told me. “So women are either leaving the platform, they’re thinking five or six times over before they post anything, they’re taking social media breaks. They’re coming up with a whole bunch of different coping mechanisms in order to avoid violence and abuse because they know by speaking out, it’s not going to be dealt with.”

Although Twitter CEO Jack Dorsey has publicly said the company is looking for help to address its issues around safety, Amnesty International says Twitter has declined to provide the organization with any “meaningful data on how the company responds to reports of violence and abuse.”

The report is the product of a 14-month investigation by Amnesty International that combined quantitative and qualitative research. It draws on interviews with 86 women and non-binary people in the U.S. and the UK, including journalists, politicians and everyday users, about their experiences online.

“When talking to them about their experience of violence and abuse, Twitter came up consistently as the platform where most women had experienced violence and abuse and also where they felt it was the company that was doing the least to remedy the issue,” Dhrodia said.

The report also outlines several recommendations for Twitter. The first is to publish specific examples of the types of violence and abuse Twitter won’t tolerate. Others include sharing data on how quickly Twitter responds to reports of abuse and ensuring that its decisions to restrict content are consistent with international human rights law.

Earlier this month, Twitter began soliciting proposals from the public to help the platform capture, measure and evaluate healthy interactions. The goal is to develop metrics for the health of conversations on Twitter. But Twitter eventually wants to take that a step further, Dorsey said in a public conversation via Periscope.

“Ultimately we want to have a measurement of how it affects the broader society and public health, but also individual health, as well,” Dorsey said.

As Twitter works to make its platform a safer, more productive place for everyone, it is relying on third parties to determine the best ways to capture, measure and evaluate health metrics. Perhaps more importantly, Twitter needs help determining exactly what those metrics should be.

I’ve reached out to Twitter and will update this story if I hear back.