YouTube says it’s rolling out more tools to help its creators make money from their videos. The changes are meant to address creators’ complaints over YouTube’s new monetization policies announced earlier this year. Those policies were designed to make the site more advertiser-friendly following a series of controversies over video content from top creators, including Logan Paul, who had filmed a suicide victim, and PewDiePie, who repeatedly used racial slurs.

The company then decided to set a higher bar to join its YouTube Partner Program, which is what allows video publishers to make money through advertising. Previously, creators only needed 10,000 total views to join; they now need at least 1,000 subscribers and 4,000 hours of view time over the past year. This resulted in wide-scale demonetization of videos that previously relied on ads.

The company has also increased policing of video content in recent months, but its systems haven’t always been accurate.

YouTube said in February it was working on better systems for reviewing video content when a video is demonetized over its content. One such change, enacted at the time, involved the use of machine learning technology to address misclassifications of videos related to this policy. This, in turn, has reduced the number of appeals from creators who want a human review of their video content instead.

According to YouTube CEO Susan Wojcicki, the volume of appeals is down by 50 percent as a result.

Wojcicki also announced another new program related to video monetization which is launching into pilot testing with a small number of creators starting this month.

This system will allow creators to disclose, specifically, what sort of content is in their video during the upload process, as it relates to YouTube’s advertiser-friendly guidelines.

“In an ideal world, we’ll eventually get to a state where creators across the platform are able to accurately represent what’s in their videos so that their insights, combined with those of our algorithmic classifiers and human reviewers, will make the monetization process much smoother with fewer false positive demonetizations,” said Wojcicki.

Essentially, this system would rely on self-disclosure regarding content, which would then be factored in as another signal for YouTube’s monetization algorithms to consider. This was something YouTube had also said in February was in the works.

Because not all videos will be brand-safe or meet the requirements to become a YouTube Partner, YouTube now says it will also roll out alternative means of making money from videos.

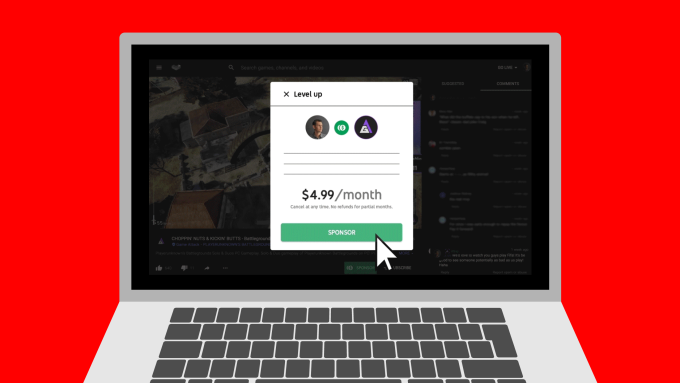

This includes an expansion of “sponsorships,” which have been in testing since last fall with a select group of creators.

Similar to Twitch subscriptions, sponsorships were introduced to the YouTube Gaming community as a way for viewers to support favorite creators through monthly subscriptions (at $4.99/mo), while also receiving various perks like custom emoji and a custom badge for live chat.

Now YouTube says “many more creators” will gain access to sponsorships in the months ahead, but it’s not yet saying how those creators will be selected, or if they’ll have to meet certain requirements. It’s also unclear if YouTube will roll these out more broadly to its community, outside of gaming.

Wojcicki gave updates on various other changes YouTube has enacted in recent months. For example, she said that YouTube’s new moderation tools have led to a 75-plus percent decline in comment flags on channels, where enabled, and these will now be expanded to 10 languages. YouTube’s newer social network-inspired Community feature has also been expanded to more channels, she noted.

The company also patted itself on the back for its improved communication with the wider creator community, saying that this year it has increased replies by 600 percent and improved its reply rate by 75 percent to tweets addressed to its official handles: @TeamYouTube, @YTCreators, and @YouTube.

While that may be true, it’s notable that YouTube isn’t publicly addressing the growing number of complaints from creators who – rightly or wrongly – believe their channel has been somehow “downgraded” by YouTube’s recommendation algorithms, resulting in declining views and loss of subscribers.

This is the issue that led the disturbed individual, Nasim Najafi Aghdam, to attack YouTube’s headquarters earlier this month. Police said that Aghdam, who shot at YouTube employees before killing herself, was “upset with the policies and practices of YouTube.”

It’s obvious, then, why YouTube is likely proceeding with extreme caution when it comes to communicating its policy changes, and isn’t directly addressing complaints similar to Aghdam’s from others in the community.

But the creator backlash is still making itself known. Just read the Twitter replies or comment thread on Wojcicki’s announcement. YouTube’s smaller creators feel they’ve been unfairly punished because of the misdeeds of a few high-profile stars. They’re angry that they don’t have visibility into why their videos are seeing reduced viewership – they only know that something changed.

YouTube glosses over this by touting the successes of its bigger channels.

“Over the last year, channels earning five figures annually grew more than 35 percent, while channels earning six figures annually grew more than 40 percent,” Wojcicki said, highlighting YouTube’s growth.

In fairness, however, YouTube is in a tough place. Its site became so successful over the years that it became impossible for it to police all the uploads manually. At first, this was cause for celebration and a chance to put Google’s advanced engineering and technology to work. But these days, as with other sites of similar scale, the challenge of policing bad actors among billions of users is becoming a Herculean task, and one that companies are failing at, too.

YouTube’s over-reliance on algorithms and technology has allowed a lot of awful content to see daylight, including inappropriate videos aimed at children, disturbing videos, terrorist propaganda, hate speech, fake news and conspiracy theories, unlabeled ads disguised as product reviews or as “fun” content, videos of kids that attract pedophiles, and commenting systems that allowed for harassment and trolling at scale.

To name a few.

YouTube may have woken up late to its numerous issues, but it’s not ignorant of them, at least.

“We know the last year has not been easy for many of you. But we’re committed to listening and using your feedback to help YouTube thrive,” Wojcicki said. “While we’re proud of this progress, I know we have more work to do.”

That’s putting it mildly.